This paper presents an algorithm for indoor layout estimation and reconstruction through the fusion of a sequence of captured images and LiDAR data sets. In the proposed system, a movable platform collects both intensity images and 2D LiDAR information. Pose estimation and semantic segmentation are computed jointly by aligning the LiDAR points to line segments from the images.

The Bayesian filter identifies the best hypothesis most of the time. Notice that a good child hypothesis was generated at frame 654 with a low prior, but as the robot approached the opening and accumulated more evidence along its path, the correct hypothesis became the winner at frame 737.
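How a hypothesis with a low prior can still become the winner as evidence accumulates can be illustrated with a recursive Bayesian update over a discrete hypothesis set. The following is a toy sketch, not the authors' implementation: the function names `bayes_update` and `frames_until_winner`, the hypothesis labels, and all numeric values are assumptions chosen for illustration.

```python
def bayes_update(posteriors, likelihoods):
    """One recursive Bayesian filtering step over a discrete hypothesis set.

    posteriors:  dict mapping hypothesis -> probability before the observation
    likelihoods: dict mapping hypothesis -> p(observation | hypothesis)
                 (made-up constants in this sketch)
    """
    unnormalized = {h: posteriors[h] * likelihoods[h] for h in posteriors}
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("all hypotheses have zero likelihood")
    return {h: p / total for h, p in unnormalized.items()}


def frames_until_winner(priors, likelihoods, target, max_frames=1000):
    """Count updates until `target` has the maximum posterior (None if never)."""
    beliefs = dict(priors)
    for frame in range(1, max_frames + 1):
        beliefs = bayes_update(beliefs, likelihoods)
        if max(beliefs, key=beliefs.get) == target:
            return frame
    return None


# Hypothetical numbers: the child starts with a low prior but explains each
# new observation slightly better than its parent.
priors = {"parent": 0.95, "child": 0.05}
likelihoods = {"parent": 0.5, "child": 0.6}
print(frames_until_winner(priors, likelihoods, "child"))
```

With a constant likelihood ratio, the posterior odds of the child grow geometrically, so even a small per-frame advantage eventually outweighs a low prior. In a real system the likelihoods would change from frame to frame with the observed features.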

We highlight several hypotheses to illustrate our method. The red hypothesis is a simple three-wall hypothesis in which each wall intersects its adjacent walls. Both the blue and the green hypotheses are child hypotheses of the red one; they were generated at frame 105 and frame 147 respectively and have the correct opening structure with different widths, and the green hypothesis is the correct one. Whenever a set of child hypotheses is generated, the posterior probability of the best hypothesis drops. Thus, the posterior probability of the red hypothesis started to decrease, and it was eventually removed from the set, while the posterior probabilities of the blue and green hypotheses kept increasing. As the robot approached the opening, more point features around the opening were observed, so the probability of the blue hypothesis dropped and it was removed from the set at frame 190. Similar phenomena occur several times throughout the video.

The third graph plots the accuracy of the system at each time frame (the accuracy is evaluated every 10 frames). The green line shows the maximum accuracy among the existing hypotheses. The blue line shows the weighted accuracy of the existing hypotheses, where the weight of each hypothesis is equal to its posterior probability. The red line shows the accuracy of the hypothesis with the maximum posterior probability.
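The three accuracy curves can be computed from the per-hypothesis posteriors and accuracies at a given frame. The sketch below assumes the accuracy of each hypothesis against ground truth is already available; how that accuracy is measured is not specified here, and the function name `summarize_accuracy` and the example values are hypothetical.

```python
def summarize_accuracy(posteriors, accuracies):
    """Return (map_accuracy, weighted_accuracy, max_accuracy) for one frame.

    posteriors: dict mapping hypothesis -> posterior probability
    accuracies: dict mapping hypothesis -> accuracy in [0, 1]
                (assumed to be given; not computed here)
    """
    if not posteriors:
        raise ValueError("no active hypotheses")
    # Red line: accuracy of the maximum-posterior hypothesis.
    best = max(posteriors, key=posteriors.get)
    map_accuracy = accuracies[best]
    # Blue line: posterior-weighted accuracy (renormalized for safety).
    total = sum(posteriors.values())
    weighted_accuracy = sum(posteriors[h] * accuracies[h] for h in posteriors) / total
    # Green line: best accuracy among the active hypotheses.
    max_accuracy = max(accuracies[h] for h in posteriors)
    return map_accuracy, weighted_accuracy, max_accuracy


# Made-up values for a single frame.
posteriors = {"red": 0.5, "blue": 0.25, "green": 0.25}
accuracies = {"red": 0.6, "blue": 0.8, "green": 1.0}
map_acc, weighted_acc, max_acc = summarize_accuracy(posteriors, accuracies)
print(map_acc, weighted_acc, max_acc)
```

The gap between the green and red curves indicates how often a better hypothesis exists in the set than the one the filter currently prefers.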

The second graph shows the posterior probability of the hypotheses at each frame. In our experiments, a set of child hypotheses is generated every 20 frames or when less than 70% of the current image is explained by the existing hypotheses.
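The generation rule stated above can be written as a simple predicate. This is a minimal sketch under assumptions: the name `should_generate_children`, the way the explained fraction is passed in, and the choice to treat frame 0 as a periodic trigger are all hypothetical; only the 20-frame interval and the 70% threshold come from the text.

```python
def should_generate_children(frame_index, explained_fraction,
                             interval=20, min_explained=0.70):
    """Decide whether to generate a new set of child hypotheses.

    frame_index:        index of the current frame (0-based, an assumption)
    explained_fraction: fraction of the current image explained by the
                        existing hypotheses, in [0, 1]
    """
    periodic = frame_index % interval == 0
    poorly_explained = explained_fraction < min_explained
    return periodic or poorly_explained


# Made-up sequence: the image is well explained except at frame 33.
explained = {i: 0.9 for i in range(60)}
explained[33] = 0.5
trigger_frames = [i for i in range(60)
                  if should_generate_children(i, explained[i])]
print(trigger_frames)
```

Combining a periodic trigger with a coverage threshold keeps the hypothesis set refreshed at a steady rate while still reacting promptly when the current hypotheses stop explaining the scene.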

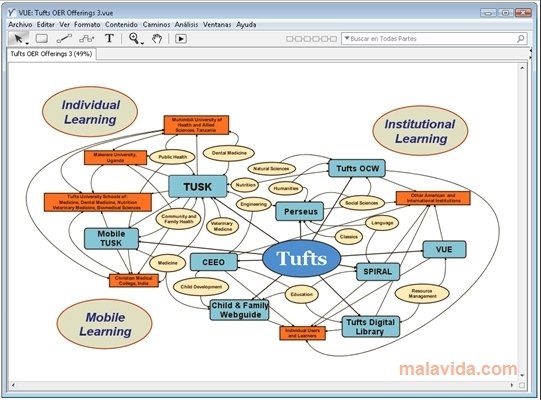

Bayesian filtering results of Dataset L. The horizontal axis of each graph is the time frame of the video. The top graph shows the number of hypotheses active in each frame. Notice that our method efficiently focuses on a small set of plausible hypotheses (at most 27 hypotheses in this case) at each frame.

The VUE project at Tufts UIT Academic Technology is focused on creating flexible tools for managing and integrating digital resources in support of teaching, learning and research. VUE provides a flexible visual environment for structuring, presenting, and sharing digital information. Using VUE's concept mapping interface, faculty and students design semantic networks of digital resources drawn from digital libraries and from local and remote file systems. Tufts University's VUE development team has coordinated releases of the VUE project. The project's most recent release, VUE 3, has added many new features which distinguish it from traditional concept mapping tools. According to posts made by the VUE team on their forums, new features include tools for dynamic presentation of maps, map merge and analysis tools, enhanced keyword tagging and search capabilities, support for semantic mapping using ontologies, and expanded search of online resources such as Flickr, Yahoo, Twitter, or PubMed.
